This is the fourth post in the series on features in the 1.1 release of BOSCO. The previous posts focused on SSH File Transfer, Single Port Usage, and Multi-OS Support, all of which are new in the 1.1 release. Now I want to talk about a feature that was already in the 1.0 release but is important enough to discuss again: Multi-Cluster Support. This feature is technically challenging, so I will start with why you should care about Multi-Cluster in BOSCO.

Why do you care about Multi-Cluster?

On a typical campus, each department may have its own cluster. Physics may have a cluster, Computer Science may have one, and Chemistry may have another. Or a computing center may have multiple clusters reflecting multiple generations of hardware. In either case, users have to pick which cluster to submit jobs to, rather than submitting to whichever has the most free cores.

You don't care what cluster you run on. You don't care how to submit jobs to the PBS Chemistry cluster or the SGE Computer Science cluster. And who wants to learn two different cluster submission methods? You only care about finishing your research.

BOSCO can unify the clusters by overlaying each cluster with an on-demand Condor cluster. That way, you only learn the Condor submission method. The Condor job you submit to BOSCO will then run at whichever cluster first has free cores for you.

What Is Multi-Cluster?

In BOSCO, Multi-Cluster is the feature that allows submission to multiple clusters with a single submit file. A user can submit a regular Condor submit file, with nothing in it that names a particular cluster, such as:

universe = vanilla

output = stdout.out

error = stderr.err

Executable = /bin/echo

arguments = hello

log = job.log

should_transfer_files = YES

when_to_transfer_output = ON_EXIT

queue 1

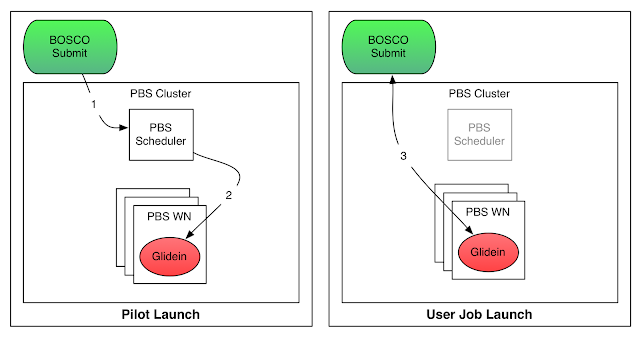

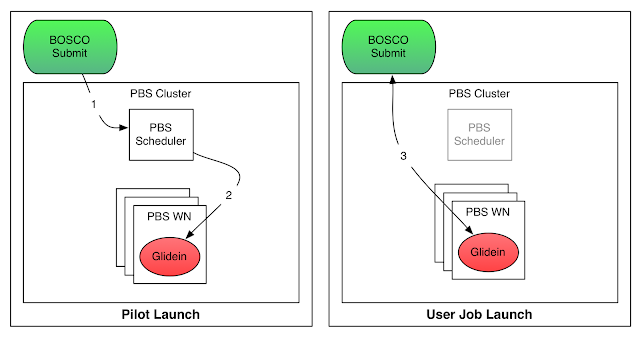

When the job is submitted, BOSCO will submit glideins to each of the clusters that are configured in BOSCO. The glideins will start on the worker nodes of the remote clusters and join the local pool at the submission host, creating an on-demand Condor pool. The jobs will then run on the remote worker nodes through the glideins. This may be best illustrated by a picture I made for my thesis:

Figure: Overview of the BOSCO job submission to a PBS cluster

In the diagram, first BOSCO will submit the glidein to the PBS cluster. Second, PBS will schedule and start the glidein on a worker node. Finally, the glidein will report to the BOSCO submit host and will start running user jobs.
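To make the three steps a little more concrete, here is a toy sketch in Python. It is not BOSCO or Condor code, and it is not how Condor matchmaking really works. It only models the idea that a glidein is sent to every cluster, each glidein becomes available after that cluster's queue wait, and each job lands on whichever glidein is free first. The cluster names, the `queue_wait` numbers, and the `run_jobs` helper are all made up for illustration.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str        # hypothetical cluster label
    scheduler: str   # e.g. "pbs" or "sge"; never consulted when placing jobs
    queue_wait: int  # made-up minutes until the local scheduler starts the glidein


def run_jobs(clusters, job_lengths):
    """Toy model: one glidein per cluster, one job at a time per glidein."""
    # Steps 1 and 2: a glidein is submitted to every cluster and starts
    # on a worker node after that cluster's queue wait.
    # Step 3: once started, it reports back and is free to take a job.
    free_at = [(c.queue_wait, c.name) for c in clusters]
    heapq.heapify(free_at)

    placements = []
    for job_id, length in enumerate(job_lengths):
        # Each job goes to whichever glidein becomes free first.
        start, name = heapq.heappop(free_at)
        placements.append((job_id, name, start))
        heapq.heappush(free_at, (start + length, name))
    return placements


clusters = [
    Cluster("chemistry", "pbs", queue_wait=15),
    Cluster("computer-science", "sge", queue_wait=5),
]
for job_id, name, start in run_jobs(clusters, job_lengths=[20, 20, 20]):
    print(f"job {job_id} -> {name} at t={start}")
```

Notice that the `scheduler` field is never looked at when placing jobs. That mirrors the point of Multi-Cluster: once the glideins have joined the pool, the user's job does not need to know whether it landed on a PBS or an SGE cluster.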

The Multi-Cluster feature will continue to be an integral part of BOSCO.

The Beta release of BOSCO is due out next week (fingers crossed!). Keep watching this blog and the BOSCO website for more news.
